Running Kubernetes in production is no small feat—especially when it comes to maintaining performance, optimizing costs, and ensuring scalability. One of the key levers in achieving this balance is autoscaling. While Kubernetes provides built-in tools to scale workloads automatically, simply enabling them isn’t enough. At Kapstan, we’ve helped teams build robust, cost-efficient autoscaling strategies that align with real-world production demands.

In this post, we’ll explore best practices for Kubernetes autoscaling in production environments, so you can scale confidently and without surprises.

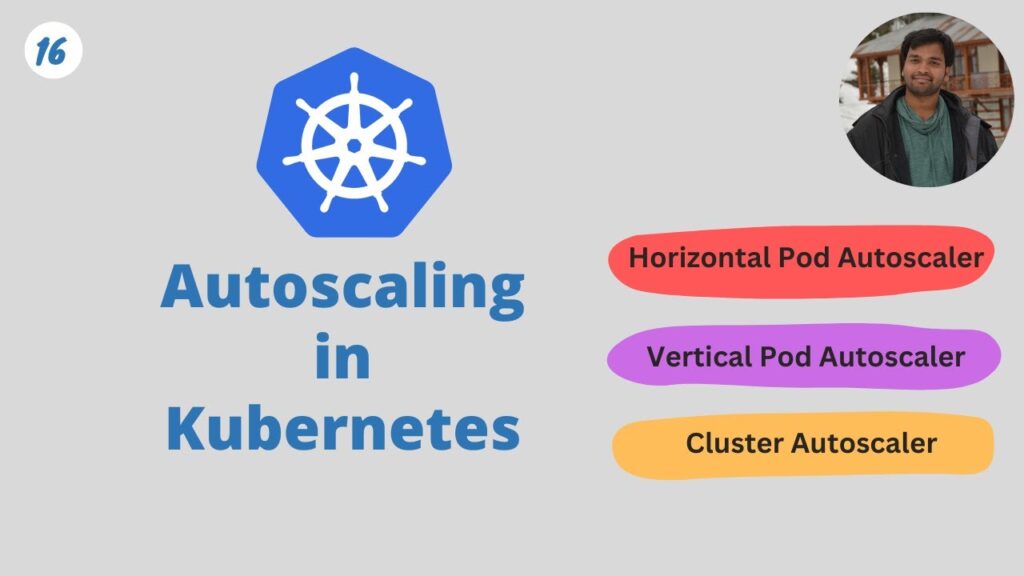

Why Kubernetes Autoscaling Matters

Autoscaling lets Kubernetes adjust capacity dynamically based on observed metrics. The main tools are:

- Horizontal Pod Autoscaler (HPA): Scales the number of pod replicas based on CPU, memory, or custom metrics.

- Vertical Pod Autoscaler (VPA): Adjusts pod resource requests (CPU/memory) up or down based on observed usage. Applying the new values typically means recreating the pod.

- Cluster Autoscaler (CA): Adds nodes when pods cannot be scheduled for lack of capacity and removes underutilized nodes by resizing node groups in the underlying cloud infrastructure.

- Karpenter (on AWS): An open-source node provisioner that launches right-sized nodes directly in response to unschedulable pods. Because it does not resize predefined node groups, it typically reacts faster than CA.
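The HPA's core rule, as described in the Kubernetes documentation, is `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. The HPA leaves the replica count unchanged when the ratio falls within a tolerance, which is 10% by default. Below is a minimal sketch of that rule for a single metric. It deliberately ignores pod readiness, missing metrics, and `behavior` policies, so treat it as an illustration rather than the controller's implementation.

```python
import math


def hpa_desired_replicas(current_replicas: int, current_metric: float,
                         target_metric: float, min_replicas: int,
                         max_replicas: int, tolerance: float = 0.1) -> int:
    """Simplified model of the HPA scaling rule for one metric."""
    ratio = current_metric / target_metric
    # Inside the tolerance band the HPA keeps the current replica count.
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Clamp to the HPA's configured minReplicas / maxReplicas.
    return max(min_replicas, min(max_replicas, desired))


print(hpa_desired_replicas(4, 90, 60, 2, 20))    # 6: 90% vs a 60% target
print(hpa_desired_replicas(4, 63, 60, 2, 20))    # 4: within the 10% tolerance
print(hpa_desired_replicas(10, 200, 50, 2, 20))  # 20: capped at maxReplicas
```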

For production environments, the stakes are higher. Autoscaling done right leads to better reliability, performance, and cost savings. Done wrong, it can result in latency spikes, pod evictions, and even outages.

Best Practices for Kubernetes Autoscaling in Production

1. Set Realistic Resource Requests and Limits

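Kubernetes schedules pods based on their resource requests, not their actual usage. If requests are too low, the scheduler packs pods onto nodes that cannot sustain their real load. Under contention those pods receive a smaller share of CPU, and pods using more memory than they requested are among the first to be evicted when a node runs short. If requests are too high, nodes fill up with capacity that is reserved but never used, and the node autoscaler adds nodes you do not need. Inflated requests also distort the HPA. CPU and memory utilization targets are measured as a percentage of the request, so an over-requested pod looks underutilized and scales up too late.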

Best practice:

Use monitoring tools (like Prometheus or Datadog) to observe actual usage and adjust resource requests accordingly. At Kapstan, we often recommend establishing baseline resource profiles during load testing before going live.
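For an HPA `Utilization` target, usage is measured against the pod's request. The sketch below uses hypothetical numbers (300m of real CPU usage, a 60% target, and a node with 4000m allocatable) to show how the request value changes both HPA behavior and scheduling density. It considers CPU only.

```python
def cpu_utilization_percent(usage_m: float, request_m: float) -> float:
    """Utilization as an HPA 'Utilization' target sees it: usage / request."""
    return 100.0 * usage_m / request_m


def pods_per_node(node_allocatable_m: int, request_m: int) -> int:
    """Pods that fit on a node when only CPU requests are considered."""
    return node_allocatable_m // request_m


usage_m = 300         # observed CPU usage per pod, millicores (hypothetical)
target_percent = 60   # HPA averageUtilization target (hypothetical)

for request_m in (400, 1000):  # right-sized vs inflated request
    util = cpu_utilization_percent(usage_m, request_m)
    print(f"request={request_m}m utilization={util:.0f}% "
          f"above_target={util > target_percent} "
          f"pods_per_4000m_node={pods_per_node(4000, request_m)}")
```

The same real load reads as 75% of a right-sized request but only 30% of an inflated one. Against a 60% target, the HPA would add replicas in the first case and see no reason to in the second. Meanwhile, the inflated request fits only 4 pods per node instead of 10.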

2. Use the Right Metrics for HPA

The default CPU-based HPA is not always sufficient. For example, web services that are I/O bound might not use much CPU but can still experience high latency.

Best practice:

Use custom or external metrics, exposed through the Kubernetes custom and external metrics APIs (for example, via Prometheus Adapter). This lets you scale on request rate, queue length, or any other metric that tracks how loaded your service actually is.
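When you scale on queue length with a per-replica (`AverageValue`) target, the HPA arithmetic reduces to "total backlog divided by how much each replica should handle, rounded up". Here is a minimal sketch of that calculation. The queue and target values are hypothetical, and the HPA's tolerance band is ignored.

```python
import math


def replicas_for_queue(queue_length: int, target_per_replica: int,
                       min_replicas: int, max_replicas: int) -> int:
    """Replicas needed so each handles about target_per_replica messages.

    Roughly mirrors an HPA metric with an AverageValue target:
    the total metric value divided by the per-replica target, rounded up.
    """
    desired = math.ceil(queue_length / target_per_replica)
    return max(min_replicas, min(max_replicas, desired))


print(replicas_for_queue(950, 100, 1, 30))   # 10
print(replicas_for_queue(0, 100, 1, 30))     # 1 (minReplicas floor)
print(replicas_for_queue(5000, 100, 1, 30))  # 30 (maxReplicas cap)
```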

3. Avoid Scaling Loops with Stabilization Windows

Without proper configuration, HPA can oscillate—scaling up and down rapidly in response to metrics.

Best practice:

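Configure the `behavior` field of an `autoscaling/v2` HorizontalPodAutoscaler. Its `stabilizationWindowSeconds` setting makes the autoscaler consider every recommendation within the window and act on the most conservative one. This way it waits out transient spikes and dips instead of reacting to each one. By default, scale-down uses a 300-second window and scale-up uses none. Lengthen the scale-down window for bursty traffic.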
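The scale-down stabilization window can be modeled as "keep the highest recommendation seen in the last N seconds". The sketch below is simplified: it covers scale-down only, and the recommendation series is hypothetical. It shows how a 300-second window absorbs a load pattern that would otherwise make replicas flap between 10 and 4.

```python
from collections import deque


class ScaleDownStabilizer:
    """Simplified model of the HPA scale-down stabilization window."""

    def __init__(self, window_seconds: int):
        self.window_seconds = window_seconds
        self.history = deque()  # (timestamp, recommended_replicas)

    def stabilize(self, now: int, recommendation: int) -> int:
        self.history.append((now, recommendation))
        # Forget recommendations that fell out of the window.
        while self.history and self.history[0][0] < now - self.window_seconds:
            self.history.popleft()
        # Act on the highest (most conservative) recent recommendation.
        return max(r for _, r in self.history)


stabilizer = ScaleDownStabilizer(window_seconds=300)
raw = [10, 10, 4, 10, 4, 4, 4, 4, 4, 4]  # one recommendation every 60 s
stabilized = [stabilizer.stabilize(i * 60, r) for i, r in enumerate(raw)]
print(stabilized)  # [10, 10, 10, 10, 10, 10, 10, 10, 10, 4]
```

Replicas drop to 4 only after the low recommendation has held for a full window, rather than bouncing every minute.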

4. Combine HPA with Cluster Autoscaler or Karpenter

Scaling pods with HPA only helps if the cluster has room to run them. Without a node-level autoscaler, new replicas that do not fit on existing nodes stay in the `Pending` state, and the extra capacity never arrives.

Best practice:

Deploy Cluster Autoscaler (or Karpenter on AWS) in tandem with HPA. This ensures that as more pods are needed, the cluster scales accordingly at the infrastructure level.

At Kapstan, we prefer Karpenter for AWS-based workloads because of its faster scaling decisions and improved bin-packing efficiency compared to the traditional Cluster Autoscaler.
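To get a feel for how pending pods translate into new nodes, here is a naive first-fit-decreasing estimate. This is a deliberately simplified, hypothetical model. Cluster Autoscaler actually simulates scheduling against node-group templates, and Karpenter evaluates many instance types. The sketch considers CPU requests only and ignores DaemonSet overhead.

```python
def nodes_needed(pending_cpu_requests_m: list[int], node_allocatable_m: int) -> int:
    """Naive first-fit-decreasing estimate of extra nodes for pending pods."""
    free = []  # remaining allocatable CPU on each hypothetical new node
    for request in sorted(pending_cpu_requests_m, reverse=True):
        if request > node_allocatable_m:
            raise ValueError(f"a pod requesting {request}m never fits this node type")
        for i, remaining in enumerate(free):
            if remaining >= request:
                free[i] -= request  # place the pod on an existing new node
                break
        else:
            free.append(node_allocatable_m - request)  # open another node
    return len(free)


# HPA adds 6 replicas requesting 1500m each; nodes have 3800m allocatable.
print(nodes_needed([1500] * 6, 3800))  # 3 (two pods per node)
```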

5. Monitor and Alert on Autoscaling Events

Blindly trusting autoscaling logic is risky. If autoscaling fails due to misconfiguration or quota limits, you might not know until your application suffers.

Best practice:

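Set up logging and alerting for autoscaling activity. This includes HPA events and status conditions (such as `ScalingLimited`, which signals that the HPA hit its replica bounds), pods stuck in `Pending`, and node-provisioning failures. Tools like Prometheus, Grafana, and CloudWatch can help you visualize trends and receive notifications when thresholds are breached.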
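One alert worth having is "the HPA has been pinned at `maxReplicas` for a sustained period", which means autoscaling has no headroom left. The sketch below evaluates that condition over a series of `(timestamp, current_replicas)` samples. The sample source (for example, HPA metrics scraped from kube-state-metrics) and the 15-minute threshold are assumptions.

```python
def pinned_at_max(samples: list[tuple[int, int]], max_replicas: int,
                  sustained_seconds: int) -> bool:
    """True if replicas stayed at max_replicas for at least sustained_seconds.

    samples: (unix_timestamp, current_replicas) pairs in ascending time order.
    """
    streak_start = None
    for ts, replicas in samples:
        if replicas >= max_replicas:
            if streak_start is None:
                streak_start = ts  # streak at the ceiling begins
            if ts - streak_start >= sustained_seconds:
                return True
        else:
            streak_start = None  # any dip below the ceiling resets the streak
    return False


steady_max = [(t, 10) for t in range(0, 1200, 60)]
print(pinned_at_max(steady_max, max_replicas=10, sustained_seconds=900))  # True
```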

6. Use Pod Disruption Budgets (PDBs)

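In high-availability environments, node-level scale-down can hurt uptime. When Cluster Autoscaler or Karpenter removes an underutilized node, it drains the node and evicts the pods running on it. If several replicas of the same service sit on nodes being drained, availability can drop sharply.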

Best practice:

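Use Pod Disruption Budgets to guarantee that a minimum number of pods remains available during voluntary disruptions, including node drains triggered by autoscaler scale-down. PDBs constrain evictions, not HPA replica changes, so use the HPA's `minReplicas` to set a floor on the replica count.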
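A PDB's effect comes down to simple arithmetic: allowed disruptions = healthy pods − desired healthy pods, never below zero. A drain stalls whenever that number is zero. The sketch below is a simplified model of that calculation. It assumes percentage values are rounded up, and the pod counts are hypothetical.

```python
import math


def allowed_disruptions(expected_pods: int, healthy_pods: int,
                        min_available=None, max_unavailable=None) -> int:
    """Simplified model of how many evictions a PodDisruptionBudget permits.

    min_available / max_unavailable: an int, or a percentage string like "50%".
    """
    def scaled(value):
        if isinstance(value, str) and value.endswith("%"):
            return math.ceil(expected_pods * int(value[:-1]) / 100)  # round up
        return int(value)

    if min_available is not None:
        desired_healthy = scaled(min_available)
    elif max_unavailable is not None:
        desired_healthy = max(0, expected_pods - scaled(max_unavailable))
    else:
        raise ValueError("set min_available or max_unavailable")
    return max(0, healthy_pods - desired_healthy)


print(allowed_disruptions(7, 7, min_available="50%"))  # 3 (needs 4 healthy)
print(allowed_disruptions(3, 2, max_unavailable=1))    # 0 (drain must wait)
```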

7. Test Autoscaling Behavior Before Production

Autoscaling can be unpredictable under real-world loads. Assumptions made in staging might break down in production.

Best practice:

Run performance/load tests that simulate real traffic patterns. At Kapstan, we integrate autoscaling behavior checks as part of CI/CD pipelines so issues can be detected before deployment.
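An autoscaling check in CI can be as simple as recording the replica count at intervals during a load test and failing the build on suspicious patterns. The sketch below flags two such patterns: hitting `maxReplicas` and frequent up/down reversals that suggest flapping. The function names, the reversal threshold, and how the replica trace is collected are all hypothetical.

```python
def count_direction_changes(replica_history: list[int]) -> int:
    """Count how often the replica count reversed direction (up<->down)."""
    changes = 0
    last_direction = 0
    for prev, cur in zip(replica_history, replica_history[1:]):
        direction = (cur > prev) - (cur < prev)  # +1 up, -1 down, 0 flat
        if direction != 0:
            if last_direction != 0 and direction != last_direction:
                changes += 1
            last_direction = direction
    return changes


def check_load_test(replica_history: list[int], max_replicas: int,
                    max_reversals: int = 2) -> list[str]:
    """Return the problems found in a load-test replica trace (empty if none)."""
    problems = []
    if max(replica_history) >= max_replicas:
        problems.append("reached maxReplicas; no headroom left")
    if count_direction_changes(replica_history) > max_reversals:
        problems.append("replica count oscillated (possible flapping)")
    return problems


print(check_load_test([2, 3, 5, 8, 8, 8, 6, 4], max_replicas=20))  # []
print(check_load_test([2, 6, 3, 7, 2, 8, 3], max_replicas=20))
```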

8. Limit Scale-Up and Scale-Down Rates

Spiky workloads can cause excessive node churn, which increases cloud costs and adds overhead.

Best practice:

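Use HPA `behavior` scaling policies to cap how many pods, or what percentage of the current replicas, can be added or removed per period. At the node level, tune settings such as Cluster Autoscaler's scale-down delays or Karpenter's disruption budgets so node churn matches your workload's tolerance. (Deployment `maxSurge` and `maxUnavailable` control rolling updates, not autoscaling.)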
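HPA scale-up policies come in two types. A `Pods` policy adds at most N pods per period, and a `Percent` policy grows by at most P% per period. `selectPolicy` chooses the most permissive (`Max`) or the most restrictive (`Min`) result. The documented default scale-up behavior is `Percent` 100 and `Pods` 4 per 15 seconds with `Max`. The sketch below is a simplified model that assumes no scaling has already happened in the current period.

```python
import math


def scale_up_limit(current_replicas: int, policies: list[tuple[str, int]],
                   select_policy: str = "Max") -> int:
    """Upper bound on replicas after one policy period (simplified HPA model)."""
    limits = []
    for kind, value in policies:
        if kind == "Pods":
            limits.append(current_replicas + value)
        elif kind == "Percent":
            limits.append(math.ceil(current_replicas * (1 + value / 100)))
        else:
            raise ValueError(f"unknown policy type: {kind}")
    return max(limits) if select_policy == "Max" else min(limits)


defaults = [("Percent", 100), ("Pods", 4)]
print(scale_up_limit(10, defaults))                               # 20
print(scale_up_limit(10, [("Percent", 50), ("Pods", 4)], "Min"))  # 14
```

If the scaling rule asks for 40 replicas, the conservative policy set lets the HPA reach only 14 in the first period and keep climbing in later periods. This smooths out node churn during sudden spikes.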

Autoscaling Isn’t Set-and-Forget

While Kubernetes autoscaling tools offer a lot of flexibility, getting them right requires continuous tuning. Your traffic patterns, infrastructure costs, and application behavior all evolve—and your autoscaling strategy should too.

At Kapstan, we specialize in designing intelligent autoscaling solutions that align with your performance goals and budget. Whether you’re using EKS, GKE, or AKS, our team can help you fine-tune autoscaling to scale just right—no more, no less.

Final Thoughts

Kubernetes autoscaling is powerful, but it’s not magic. It requires a thoughtful balance between application performance, infrastructure limits, and cost management. By following the best practices outlined above—and continuously monitoring and testing—you can ensure your workloads scale reliably under pressure.
